LLMs vs LMMs vs LAMs - What's the difference and will 2024 be the year of the "Universal LAM"?

AI architecture is evolving rapidly: last year's models are already outdated, this year's are incredible, and next year's promise to be astonishing! How will ChatGPT evolve to stay relevant when AI is everywhere?

Hi folks, in this week’s newsletter, I’m exploring LAMs: what they are, and how the emergence of a “Universal LAM” could be a game-changer for business productivity.

Diving deep into the AI world, we've seen Large Language Models (LLMs) dominate the past year, and now Large Multimodal Models (LMMs), like GPT-4V, are making waves.

But what's next?

From intriguing acronyms to game-changing predictions, in this article, I take a peek into the future of AI in business.

Will LAMs be the next big disruptor?

And how will AI tech giants like OpenAI, Google and Microsoft adapt to this new wave?

Get a glimpse into what the future of AI might hold.

(And find out what a “LAM” is!)

Let’s dive in! 🚀🔍

The past 12 months have been characterised by large language models generating copious drafts of blogs, ads and even whole novels.

And the current trend is towards multimodal AI, where models can see, hear and speak using text, audio and images.

What advances will the next 12 months hold?

Well, first, generative AI and multimodality are not going away.

They’re only going to get better.

As Sam Altman, CEO of OpenAI, is keen to point out,

“This is the worst that AI is ever going to be…”

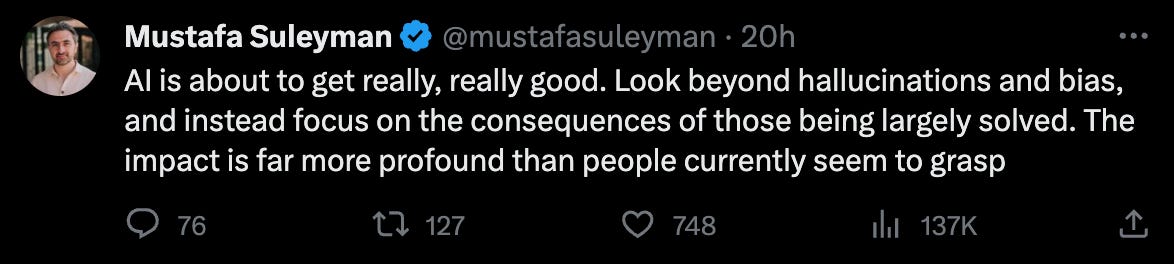

And, if a recent X/Twitter post by Mustafa Suleyman, co-founder of Inflection AI, is to be believed, problems like model hallucinations and bias may be close to being solved.

But, what else is coming down the AI tracks in the business world?

In last week’s newsletter, I discussed the “State of AI Report” by Nathan Benaich and the team at Air Street Capital.

The Report, which covers the main trends, themes and progress in the AI space over the previous 12 months, also makes 10 predictions for the next 12 months.

Whilst these are all well and good, one prediction I’d like to add to this list is the rise, in 2024, of models with “action” capability: what I’m calling a LAM.

But, what is a LAM?

Let’s get the terminology clear, as it can be a bit confusing.

LLM, LMM, LAM … WTF!!?

If you’ve been reading my newsletter for any length of time, you’ll know by now that I love a good TLA (Three Letter Acronym), so let’s explain what these ones mean:

Large Language Models (LLMs) focus on processing and understanding text, and generating human-like text output. An example is GPT-3.5.

Large Multimodal Models (LMMs) are trained on multiple types of data (e.g., text, images, audio) and can understand and generate text from these inputs. An example is GPT-4V.


Subscribe to BotZilla AI Newsletter to keep reading this post and get 7 days of free access to the full post archives.