From AI to Armageddon: Steps to Prevent a Digital Disaster

AI as an existential threat to humanity was in the news again, this time in a statement signed by some of the world's leading AI experts. Let's take an in-depth look at the risks and implications.

Hey folks, in this week’s newsletter, I’m discussing AI risks and alignment, and commenting on an open letter from yet another AI safety organisation calling for steps to mitigate “the risk of extinction from AI” before it’s too late.

Also, I give my take on the difference between intelligence, consciousness and sentience, and discuss how an AI might be able to simulate consciousness by employing certain “System 2” techniques, as described by Daniel Kahneman in his book “Thinking, Fast and Slow”.

Do you ever wake up a little dazed and confused from a deep sleep and think,

“I’m sure I’ve got something unpleasant to worry about…”

And then, as your conscious mind slowly returns, it hits you.

Maybe it’s anxiety about an upcoming exam.

Or an important meeting you’re dreading that, in the bliss of your deep sleep, you’ve completely forgotten about.

Well, that’s kind of how I’ve been feeling in 2023 with respect to AI.

Every morning I wake up thinking,

“I don’t quite feel right. There’s something on my mind…”

And then, boom!

It hits me.

“OH YEAH, AI IS GONNA KILL US ALL!”

Or is it?

Well, that’s what I want to discuss in today’s newsletter.

Certainly, an increasing number of the world’s top AI researchers seem to think so.

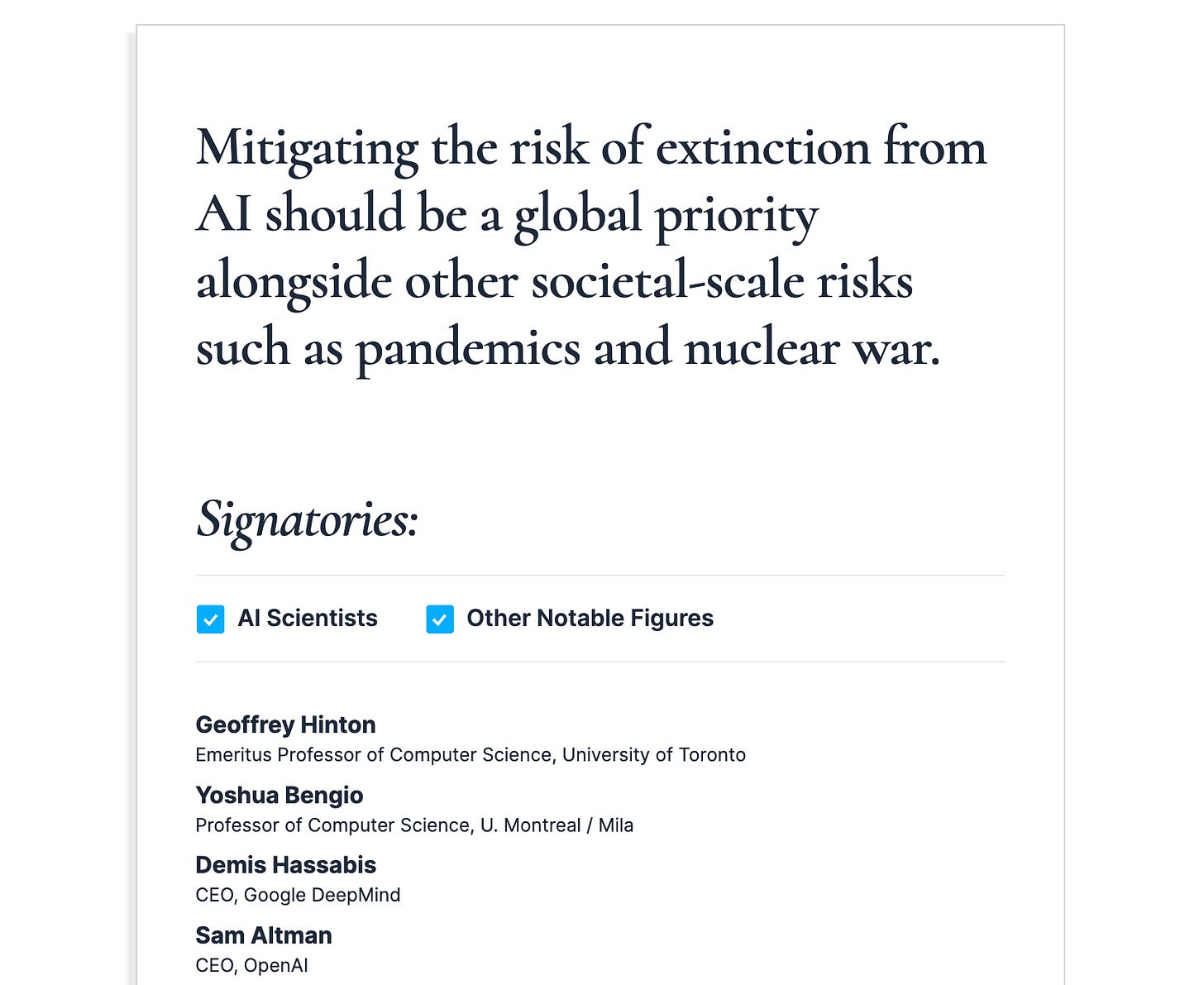

The latest open letter, from what appears to be a little-known (outside AI circles, at least) “AI safety” organisation, has attracted global media attention for its warning about the “risk of extinction” from AI, largely because it persuaded some of the world’s most prominent AI experts to sign it.

And it’s not that AI might just kill some proportion of the human race.

Like guns, bombs, earthquakes and even cars do.

They are talking about an actual “extinction” event, like a large asteroid, or all-out global nuclear war.

That is indeed a bold claim.

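In case you didn’t see it, here’s the letter from the Center for AI Safety (CAIS):

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”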

Short, and (not so) sweet.

This time, the letter is also signed by Sam Altman, the CEO of OpenAI, the company that created ChatGPT.

Whilst I’m totally for AI regulation, I can’t help thinking there’s something else motivating the creators of these letters, as well as some of the signatories.

For a start, the people signing the letter are mostly the very same people who are building the so-called “doomsday AI” systems!

Is it too cynical to think that by encouraging heavy regulation in the field of AI, they believe it will allow them to maintain a strong competitive advantage over new entrants?

It’s possible.

Also, is it a coincidence that several of the organisations tackling AI risk appear to be driven by the same philosophies of Longtermism and Effective Altruism?

Cynical opinions aside for a moment, what I do agree with wholeheartedly is that AI needs to be regulated.

It's undeniable that large language models like ChatGPT, Google Bard, and others are powerful tools, even today.

And their capabilities are expected to expand further as these models continue to be refined.

I’m also cognisant that I’m not working at the very cutting edge of AI research, as some of the signatories are, so they may well be privy to information and capabilities far beyond what we see, even in today’s most powerful public models, such as GPT-4.

So let’s start by reviewing the eight AI risks identified by CAIS.

The eight AI risks outlined by CAIS


Subscribe to BotZilla AI Newsletter to keep reading this post and get 7 days of free access to the full post archives.