Digging Deeper Into Chatbot Development Using LangChain And ActiveLoop

There comes a time when there's no other option than to write code. Thankfully, there are some great frameworks and tools to help you out.

Hey folks, in this week’s newsletter, I’m continuing my journey into chatbot tech, but this time digging deeper into the code.

As an aside, if you know someone techie who might be interested in getting into chatbot development but doesn’t know where to start, feel free to share this with them.

I’m covering LangChain, the de facto Python and JavaScript framework for developing applications powered by language models from providers such as OpenAI (GPT-4), Cohere, Anthropic (Claude) and Replicate, to name a few.

Most notably, LangChain provides access to the Hugging Face Hub and Hugging Face Local Pipelines, with more than 120K models and 20K datasets available, which can easily be incorporated into your chatbot app and hosted either in the cloud or locally on your own infrastructure.

I’ll also touch on some of the frameworks that can help you get your bot up and running super quickly for testing and production purposes.

It’s going to be a ride!

Let’s dive in.

I want to start by saying that I’m not a professional programmer.

At least not any more.

The last time I programmed for cash was, well, let’s just say, “a while” ago.

That’s not to say I don’t dabble from time to time, mostly in Python and JavaScript, which, as luck would have it, are the two most important languages for developing full-stack chatbots.

But the thing about the new AI development paradigm, as I wrote last week, is that you can achieve so much while hardly coding at all.

For example,

Prompting, the way we communicate with text-based language models like GPT-4, has been called “programming in prose” and, even more prosaically, “AI whispering”. Just contemplating either term sends a tingle down my spine.

We can prompt models not only through apps like ChatGPT but also from within the actual code of AI-enabled apps.

Prompt-based development is making the traditional machine learning development lifecycle much, much faster.

Like exponentially faster compared to what it was a few years ago.

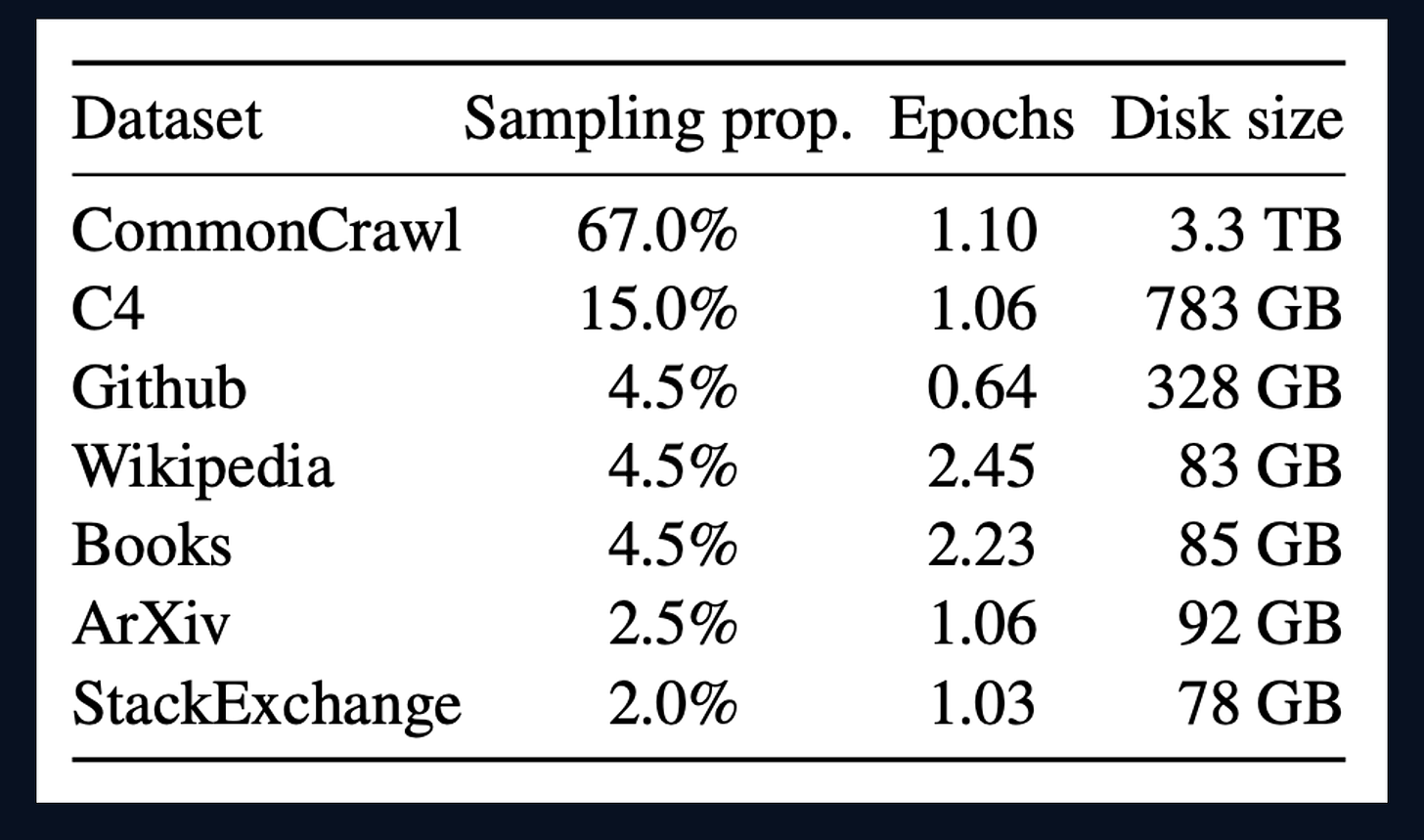

It used to be that to deploy a model from scratch, you had to pre-train it on a huge corpus of data from the web, books, etc., and then fine-tune it further for the specific use case or domain you wanted.

This process not only takes a long time but also requires people with highly specialised deep learning skills, not to mention that it costs a fortune.

For example,

For Meta’s recent open-source LLaMA model, the pre-training phase took 21 days on 2,048 NVIDIA A100 GPUs and cost a staggering $5M. And that doesn’t include the time and cost of further fine-tuning and RLHF (reinforcement learning from human feedback).
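To put those numbers in perspective, here’s the back-of-the-envelope arithmetic using only the figures quoted above. Note that the hourly rate is just the $5M estimate divided by total GPU-hours, an implied figure rather than a published price:

```latex
\begin{aligned}
\text{GPU-hours} &= 2048 \times 21 \times 24 = 1{,}032{,}192 \approx 1.03 \times 10^{6} \\
\text{implied cost per GPU-hour} &\approx \frac{\$5{,}000{,}000}{1{,}032{,}192} \approx \$4.84
\end{aligned}
```

In other words, over a million GPU-hours of compute before a single user ever chats with the model.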

These days, models like GPT-4 are so powerful, and so easily accessed as a cloud service, that few-shot or even zero-shot prompting can be enough to get the model in the right “mood” to provide accurate responses.
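To make the zero-shot vs few-shot distinction concrete, here’s a minimal Python sketch, assuming a simple “Input:/Output:” text format. The `build_prompt` helper, the instruction and the example messages are all hypothetical; the resulting string is what you’d send to whichever model API you’re using:

```python
from typing import Sequence, Tuple


def build_prompt(instruction: str, query: str,
                 examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Assemble a plain-text prompt (hypothetical helper).

    With no examples this is a zero-shot prompt; passing a few
    (input, output) pairs turns it into a few-shot prompt.
    """
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The model is expected to continue the text after the final "Output:".
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


instruction = "Classify the sentiment of each customer message as positive or negative."
query = "The bot answered my question instantly!"

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[
        ("I waited an hour and nobody replied.", "negative"),
        ("Thanks, that fixed it.", "positive"),
    ],
)
print(few_shot)
```

The only difference between the two prompts is the handful of worked examples, which is often all it takes to nudge the model towards the format and labels you want.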

When combined with a vector database containing domain- or organisation-specific data and documents, these models can act as “reasoning engines”, taking the results of a semantic search and turning them into natural-language answers for human consumption.
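Here’s a toy Python sketch of that retrieve-then-reason pattern. It is not how LangChain or ActiveLoop implement it: the bag-of-words `embed` function stands in for a real embedding model, `ToyVectorStore` stands in for a real vector database (ActiveLoop’s Deep Lake is one option), and all names and documents are hypothetical. It just shows the shape of the idea: find the most similar documents, then paste them into the prompt as context.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term count.

    A real system would call an embedding model here; this stand-in
    only exists so the example runs without external services.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._docs: List[str] = []
        self._vectors: List[Counter] = []

    def add(self, doc: str) -> None:
        self._docs.append(doc)
        self._vectors.append(embed(doc))

    def search(self, query: str, k: int = 2) -> List[str]:
        """Return the k documents most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(zip(self._vectors, self._docs),
                        key=lambda pair: cosine(query_vec, pair[0]),
                        reverse=True)
        return [doc for _, doc in ranked[:k]]


def build_grounded_prompt(question: str, store: ToyVectorStore, k: int = 2) -> str:
    """Put the retrieved documents into the prompt as context for the model."""
    context = "\n".join(f"- {doc}" for doc in store.search(question, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


store = ToyVectorStore()
for doc in [
    "Refunds are processed within 5 business days of approval.",
    "Our support team is available Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support and a dedicated account manager.",
]:
    store.add(doc)

print(build_grounded_prompt("How quickly are refunds processed?", store, k=1))
```

The language model never searches anything itself; it only reasons over whatever the retrieval step hands it, which is why the quality of the vector search matters so much.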

Having said that, if you really want to get the most from language models, whether for your chatbot or some other AI-enabled app, you need to hit the metal - “the metal” being code in this instance.

To do that, you need to go above and beyond the “traditional” role of prompt engineer to include skills in coding and infrastructure.

Enter the AI Engineer ← New Role

There’s been a recent trend to broaden and re-imagine the role of “prompt engineer” as “AI engineer”.

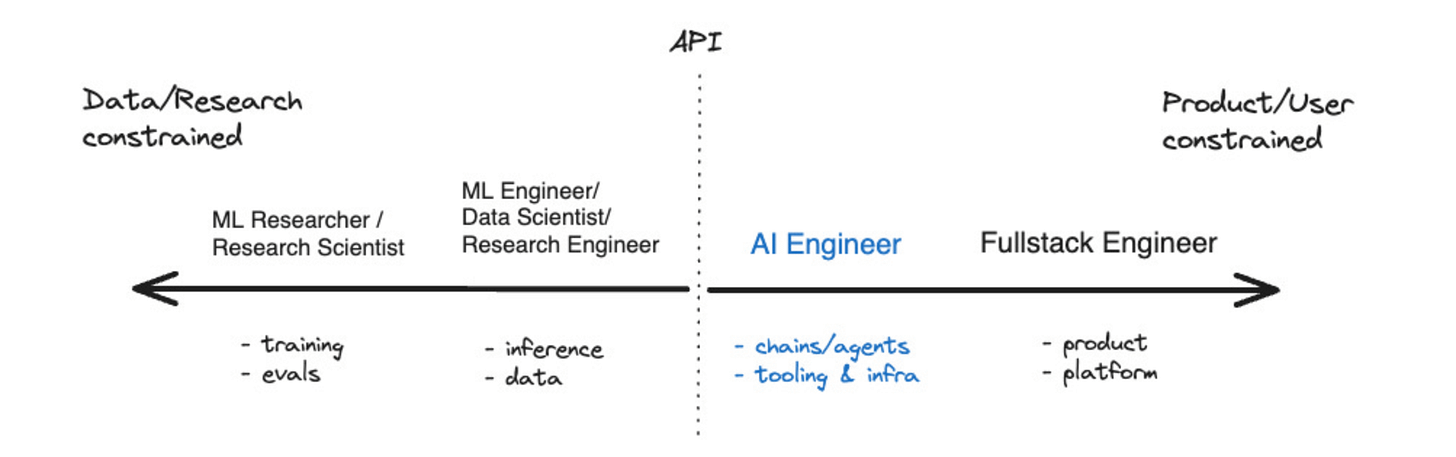

If you’re interested in learning more about this movement, swyx has written a good article on it. The diagram above, also by swyx, shows how the AI Engineer fits into a more traditional AI development team.

This role can include everything from problem analysis and prompt writing in natural language to writing the backend code that calls a language model to implement a chatbot or agent.
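As a rough illustration of that backend piece, here’s a minimal Python sketch of a chat session that keeps conversation history and hands it to a model on every turn. It assumes the role/content message format popularised by chat-style APIs such as OpenAI’s; `ChatSession`, `ModelFn` and `echo_model` are hypothetical names, and the echo function is an offline stand-in for a real API call:

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical signature: whatever client you use takes a message list, returns reply text.
ModelFn = Callable[[List[Message]], str]


class ChatSession:
    """Keeps a system prompt plus a sliding window of recent conversation turns."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system_prompt = system_prompt
        self.max_turns = max_turns  # number of past user/assistant pairs to resend
        self.history: List[Message] = []

    def send(self, user_text: str, model_fn: ModelFn) -> str:
        # History always holds complete (user, assistant) pairs at this point,
        # so keep only the most recent max_turns pairs.
        start = max(0, len(self.history) - 2 * self.max_turns)
        user_msg = {"role": "user", "content": user_text}
        messages = (
            [{"role": "system", "content": self.system_prompt}]
            + self.history[start:]
            + [user_msg]
        )
        reply = model_fn(messages)
        self.history.append(user_msg)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages: List[Message]) -> str:
    """Offline stand-in for a real model call: echoes the latest user message."""
    return f"You said: {messages[-1]['content']}"


session = ChatSession("You are a helpful support bot.", max_turns=1)
print(session.send("Hi", echo_model))
```

Capping the history is a deliberate trade-off: models have a limited context window (and you usually pay per token), so a real bot has to decide how much of the conversation to resend on each call.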

The role sits somewhere between a machine learning engineer and a more traditional full-stack web developer who handles the front end and overall platform architecture.


Subscribe to BotZilla AI Newsletter to keep reading this post and get 7 days of free access to the full post archives.