ChatGPT, Bard, Bing and More: What's New in LLMs in 2023 So Far?

It's only March, but already so much has happened!

AI models based on the Transformer architecture, like ChatGPT, are advancing so rapidly that it is hard to keep track of all the latest developments.

In this week's newsletter, I'll update you on some of the big LLM announcements that have caught my attention in 2023 so far.

“AI as a Service”

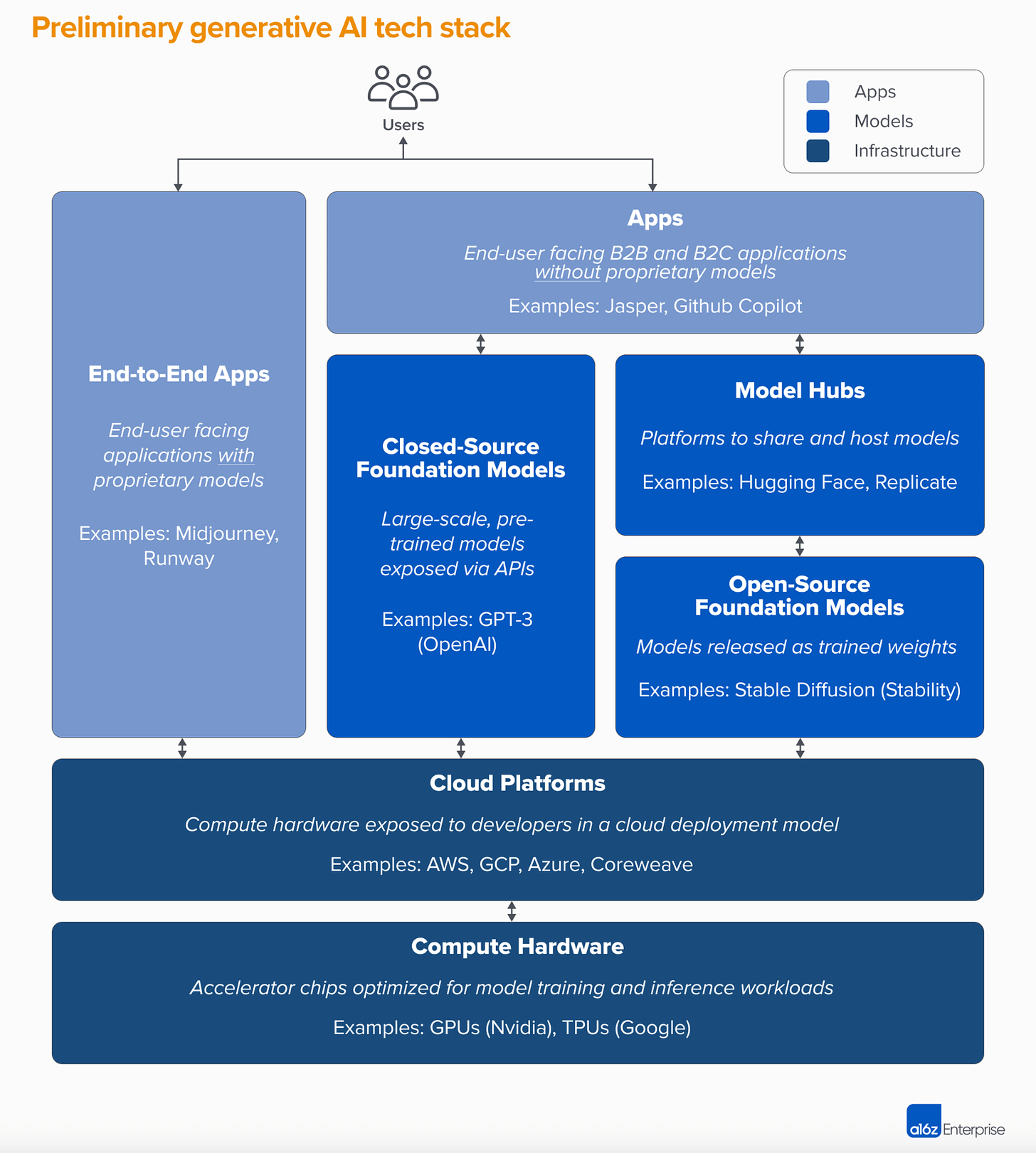

Many people, including executives of big companies and wannabe AI entrepreneurs, have come to realise that we are now in an era of "AI as a Service" - especially for those seeking to make use of generative AI models like ChatGPT for natural language processing tasks.

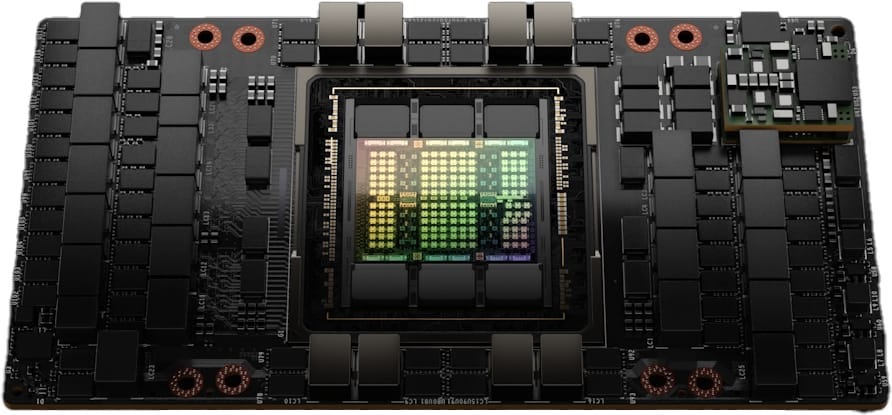

Currently, the processing requirements for both training LLMs and running AI inference (when a model like ChatGPT processes users’ queries at runtime) are immense, and only the most well-resourced tech companies or venture capital-backed unicorn startups can sustain the cost.

The largest LLMs require thousands of GPUs for training (see below the allegedly leaked research paper from Morgan Stanley regarding GPT 5 training), and even then, training may take many months.

Furthermore, once training has been completed, a further huge number of GPUs is required at inference time to answer many users’ questions in parallel.

As a result, an increasing number of startups and larger organisations are utilising cloud-hosted AI via “APIs” (Application Programming Interfaces) offered by companies such as OpenAI, Microsoft, and Cohere.

These APIs provide app companies with cutting-edge natural language processing and comprehension capabilities that allow them to build a new class of AI-enabled tools for their clients and end users that they have never been able to offer before.
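To make the idea of "AI as a Service" concrete, here is a minimal Python sketch of what calling such an API can look like. It assumes OpenAI's chat completions endpoint and the `gpt-3.5-turbo` model name; the helper names `build_chat_request` and `ask`, and the `OPENAI_API_KEY` environment variable, are illustrative choices of mine rather than anything prescribed by a provider. Other vendors' APIs differ in detail, so treat this as a sketch rather than a reference implementation.

```python
import json
import os
import urllib.request

# Assumption: OpenAI's chat completions endpoint; other providers use different URLs.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, api_key, model="gpt-3.5-turbo"):
    """Build (but do not send) an HTTP request carrying a single user message."""
    payload = {
        "model": model,  # assumed model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the hosted model and return the text of its reply."""
    # Hypothetical convention: the API key is read from an environment variable.
    request = build_chat_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # Assumed response shape: the reply text sits in the first "choice".
    return body["choices"][0]["message"]["content"]
```

With a valid key set, something like `ask("Summarise this contract clause in plain English: ...")` would return the model's answer as a string. The point is that the app company writes a few lines of HTTP code, while the GPUs and the model itself stay with the provider.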

Any company handling significant amounts of text, images, code or audio data (soon video, too) has the potential to benefit from transformer models. Stay tuned for major developments in industries such as law, media, and marketing in the coming months as new GPT models are released.

OpenAI news

The hype around OpenAI and ChatGPT since its release at the end of November 2022 has been unprecedented. ChatGPT reportedly reached 100 million unique users by the end of January 2023, faster adoption than any other consumer tech product in history, suggesting that, whatever critics say about LLMs, it has found remarkable product-market fit.

However, the model powering ChatGPT is quite old by today’s standards, with a training cut-off somewhere between June and September 2021. Since then, technology and models have advanced rapidly.

Preparing for AGI “and beyond”

Sam Altman (OpenAI CEO) released a document regarding planning for AGI (Artificial General Intelligence) “and beyond”. However, what “AGI” and “beyond” mean in this context is anyone’s guess!
